[K8S Deploy Study by Gasida] - RKE2 - 2 Deploying and Verifying the Lab Environment


Deploying the lab environment

- Vagrantfile : Rocky Linux 9

```ruby
# Base Image https://portal.cloud.hashicorp.com/vagrant/discover/bento/rockylinux-9
BOX_IMAGE = "bento/rockylinux-9" # "bento/rockylinux-10.0"
BOX_VERSION = "202510.26.0"
N = 2 # max number of Node

Vagrant.configure("2") do |config|
  # Nodes
  (1..N).each do |i|
    config.vm.define "k8s-node#{i}" do |subconfig|
      subconfig.vm.box = BOX_IMAGE
      subconfig.vm.box_version = BOX_VERSION
      subconfig.vm.provider "virtualbox" do |vb|
        vb.customize ["modifyvm", :id, "--groups", "/RKE2-Lab"]
        vb.customize ["modifyvm", :id, "--nicpromisc2", "allow-all"]
        vb.name = "k8s-node#{i}"
        vb.cpus = 4
        vb.memory = 4096
        vb.linked_clone = true
      end
      subconfig.vm.host_name = "k8s-node#{i}"
      subconfig.vm.network "private_network", ip: "192.168.10.1#{i}"
      subconfig.vm.network "forwarded_port", guest: 22, host: "6000#{i}", auto_correct: true, id: "ssh"
      subconfig.vm.synced_folder "./", "/vagrant", disabled: true
      subconfig.vm.provision "shell", path: "init_cfg.sh" , args: [ N ]
    end
  end
end
```
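The node IP and forwarded SSH port in the Vagrantfile are built by string interpolation (`"192.168.10.1#{i}"`, `"6000#{i}"`), not arithmetic. A minimal bash sketch that prints the same hostname / IP / host-port mapping, so the scheme can be checked before `vagrant up`; `node_plan` is a hypothetical helper, not part of the lab files:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the hostname, private IP and forwarded SSH host port
# that the Vagrantfile derives for each node index i (1..N).
# Both values are string concatenations, mirroring "192.168.10.1#{i}" and "6000#{i}".
node_plan() {
  local n="$1" i
  for (( i = 1; i <= n; i++ )); do
    printf 'k8s-node%d 192.168.10.1%d %s\n' "$i" "$i" "6000$i"
  done
}

node_plan 2
```

Because of the concatenation, a tenth node would get 192.168.10.110 and host port 600010, which is above 65535 and not a valid TCP port, so the pattern only stays valid for N of 9 or less.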

- init_cfg.sh

```shell
#!/usr/bin/env bash

echo ">>>> Initial Config Start <<<<"

echo "[TASK 1] Change Timezone and Enable NTP"
timedatectl set-local-rtc 0
timedatectl set-timezone Asia/Seoul

echo "[TASK 2] Disable firewalld and selinux"
systemctl disable --now firewalld >/dev/null 2>&1
setenforce 0
sed -i 's/^SELINUX=enforcing/SELINUX=permissive/' /etc/selinux/config

echo "[TASK 3] Disable and turn off SWAP & Delete swap partitions"
swapoff -a
sed -i '/swap/d' /etc/fstab
sfdisk --delete /dev/sda 2 >/dev/null 2>&1
partprobe /dev/sda >/dev/null 2>&1

echo "[TASK 4] Config kernel & module"
cat << EOF > /etc/modules-load.d/k8s.conf
overlay
br_netfilter
#vxlan
EOF
modprobe overlay >/dev/null 2>&1
modprobe br_netfilter >/dev/null 2>&1
#modprobe vxlan >/dev/null 2>&1

cat << EOF >/etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF
sysctl --system >/dev/null 2>&1

echo "[TASK 5] Setting Local DNS Using Hosts file"
sed -i '/^127\.0\.\(1\|2\)\.1/d' /etc/hosts
for (( i=1; i<=$1; i++ )); do echo "192.168.10.1$i k8s-node$i" >> /etc/hosts; done

echo "[TASK 6] Install Helm"
curl -fsSL https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3 | DESIRED_VERSION=v3.20.0 bash >/dev/null 2>&1

echo "[TASK 7] Setting SSHD"
cat << EOF >> /etc/ssh/sshd_config
PermitRootLogin yes
PasswordAuthentication yes
EOF
systemctl restart sshd >/dev/null 2>&1

echo "[TASK 8] Install packages"
dnf install -y conntrack python3-pip git >/dev/null 2>&1

echo "[TASK 9] NetworkManager to ignore calico/flannel related network interfaces"
# https://docs.rke2.io/known_issues#networkmanager
cat << EOF > /etc/NetworkManager/conf.d/k8s.conf
[keyfile]
unmanaged-devices=interface-name:flannel*;interface-name:cali*;interface-name:tunl*;interface-name:vxlan.calico;interface-name:vxlan-v6.calico;interface-name:wireguard.cali;interface-name:wg-v6.cali
EOF
systemctl reload NetworkManager

echo "[TASK 11] Install K9s"
CLI_ARCH=amd64
if [ "$(uname -m)" = "aarch64" ]; then CLI_ARCH=arm64; fi
wget -P /tmp https://github.com/derailed/k9s/releases/latest/download/k9s_linux_${CLI_ARCH}.tar.gz >/dev/null 2>&1
tar -xzf /tmp/k9s_linux_${CLI_ARCH}.tar.gz -C /tmp
chown root:root /tmp/k9s
mv /tmp/k9s /usr/local/bin/
chmod +x /usr/local/bin/k9s

echo "[TASK 12] ETC"
echo "sudo su -" >> /home/vagrant/.bashrc

echo ">>>> Initial Config End <<<<"
```
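TASK 5 appends the node entries on every run, so re-provisioning (for example with `vagrant provision`) leaves duplicate lines in /etc/hosts. A sketch of an idempotent variant, assuming GNU sed; `set_node_hosts` is a hypothetical name and is not part of init_cfg.sh:

```shell
#!/usr/bin/env bash
# Hypothetical variant of TASK 5: write the k8s-nodeN entries idempotently.
# Usage: set_node_hosts <hosts-file> <node-count>
set_node_hosts() {
  local hosts_file="$1" n="$2" i
  # Remove any line ending in " k8s-node<digits>" left by a previous run (GNU sed -i)
  sed -i '/ k8s-node[0-9][0-9]*$/d' "$hosts_file"
  for (( i = 1; i <= n; i++ )); do
    echo "192.168.10.1$i k8s-node$i" >> "$hosts_file"
  done
}

# Inside init_cfg.sh this would replace the for-loop of TASK 5:
# set_node_hosts /etc/hosts "$1"
```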

```shell
mkdir k8s-rke2
cd k8s-rke2
curl -O https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/k8s-rke2/Vagrantfile
curl -O https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/k8s-rke2/init_cfg.sh
vagrant up
vagrant status
```

Installing the RKE2 server node

Node installation (on k8s-node1)

```shell
curl -sfL https://get.rke2.io --output install.sh
chmod +x install.sh
INSTALL_RKE2_CHANNEL=v1.33 ./install.sh
```

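Installer environment variables:

- INSTALL_RKE2_VERSION: RKE2 version to download from GitHub. If unset, the latest version in the stable channel is used.
- INSTALL_RKE2_TYPE: type of systemd service to create: server (default) or agent.
- INSTALL_RKE2_CHANNEL_URL: channel URL used to look up the RKE2 download URL (default: https://update.rke2.io/v1-release/channels).
- INSTALL_RKE2_CHANNEL: channel used to look up the download URL: stable (default), latest, testing, or a version channel such as v1.33 as used above.
- INSTALL_RKE2_METHOD: installation method: rpm (default on RPM-based systems) or tar (default elsewhere).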

RKE2 configuration

- Server config file: CNI plugin (canal), disabled add-ons, etc.

```shell
mkdir -p /etc/rancher/rke2   # make sure the config directory exists
cat << EOF > /etc/rancher/rke2/config.yaml
write-kubeconfig-mode: "0644"
debug: true
cni: canal
bind-address: 192.168.10.11
advertise-address: 192.168.10.11
node-ip: 192.168.10.11
disable-cloud-controller: true
disable:
  - servicelb
  - rke2-coredns-autoscaler
  - rke2-ingress-nginx
  - rke2-snapshot-controller
  - rke2-snapshot-controller-crd
  - rke2-snapshot-validation-webhook
EOF
```
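The node address appears three times in config.yaml. A sketch that renders the same file from a single node-IP argument, which avoids a mismatch between bind-address, advertise-address and node-ip when the lab is adapted to other addresses; `write_server_config` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: render the server config.yaml shown above for one node IP.
# Usage: write_server_config <output-file> <node-ip>
write_server_config() {
  local out="$1" ip="$2"
  mkdir -p "$(dirname "$out")"
  cat << EOF > "$out"
write-kubeconfig-mode: "0644"
debug: true
cni: canal
bind-address: ${ip}
advertise-address: ${ip}
node-ip: ${ip}
disable-cloud-controller: true
disable:
  - servicelb
  - rke2-coredns-autoscaler
  - rke2-ingress-nginx
  - rke2-snapshot-controller
  - rke2-snapshot-controller-crd
  - rke2-snapshot-validation-webhook
EOF
}

# On k8s-node1:
# write_server_config /etc/rancher/rke2/config.yaml 192.168.10.11
```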

- Write a HelmChartConfig with values for the canal CNI plugin Helm chart

```shell
mkdir -p /var/lib/rancher/rke2/server/manifests/
cat << EOF > /var/lib/rancher/rke2/server/manifests/rke2-canal-config.yaml
apiVersion: helm.cattle.io/v1
kind: HelmChartConfig
metadata:
  name: rke2-canal
  namespace: kube-system
spec:
  valuesContent: |-
    flannel:
      iface: "enp0s9"   # NIC carrying the 192.168.10.0/24 lab network in this environment
EOF
```
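flannel.iface has to name the NIC that carries the 192.168.10.0/24 lab network, and enp0s9 is specific to this VirtualBox setup. A sketch that looks the name up from an IP address, assuming the one-line-per-address output of iproute2's `ip -o -4 addr show` (interface name in field 2, ADDRESS/PREFIX in field 4); `iface_for_ip` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the interface that holds a given IPv4 address.
# Reads `ip -o -4 addr show` output on stdin; compares the address exactly,
# so 192.168.10.1 does not match 192.168.10.11.
iface_for_ip() {
  local ip="$1"
  awk -v ip="$ip" '{ split($4, a, "/"); if (a[1] == ip) { print $2; exit } }'
}

# On k8s-node1:
# ip -o -4 addr show | iface_for_ip 192.168.10.11
```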

- Write a HelmChartConfig so that the CoreDNS autoscaler is not installed

```shell
cat << EOF > /var/lib/rancher/rke2/server/manifests/rke2-coredns-config.yaml
apiVersion: helm.cattle.io/v1
kind: HelmChartConfig
metadata:
  name: rke2-coredns
  namespace: kube-system
spec:
  valuesContent: |-
    autoscaler:
      enabled: false
EOF
```

Starting RKE2

- Start RKE2: this takes about 2 minutes, plus roughly another 1 to 2 minutes until the CoreDNS pods are healthy

```shell
systemctl enable --now rke2-server.service
systemctl status rke2-server --no-pager
```
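Since startup takes a few minutes, a polling helper saves re-running status checks by hand. A minimal sketch; `wait_until` is hypothetical and simply retries any command until it succeeds or the timeout (in seconds) expires:

```shell
#!/usr/bin/env bash
# Hypothetical helper: retry a command until it succeeds or the timeout expires.
# Usage: wait_until <timeout-sec> <interval-sec> <command> [args...]
wait_until() {
  local timeout="$1" interval="$2"
  shift 2
  local deadline=$(( SECONDS + timeout ))   # SECONDS: bash's elapsed-time counter
  until "$@"; do
    if (( SECONDS >= deadline )); then
      echo "timed out after ${timeout}s: $*" >&2
      return 1
    fi
    sleep "$interval"
  done
}

# Examples on k8s-node1 (kubectl used via its full path and the generated kubeconfig):
# wait_until 300 5 systemctl is-active --quiet rke2-server
# wait_until 300 5 /var/lib/rancher/rke2/bin/kubectl --kubeconfig /etc/rancher/rke2/rke2.yaml \
#   wait --for=condition=Ready node/k8s-node1 --timeout=10s
```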

- Check the processes

```shell
pstree -a | grep -v color | grep 'rke2$' -A5
pstree -a | grep -v color | grep 'containerd-shim ' -A2
```

- Monitor from a new terminal window

```shell
watch -d pstree -a
journalctl -u rke2-server -f
```

- Copy the credentials (kubeconfig) file

```shell
mkdir -p ~/.kube
ls -l /etc/rancher/rke2/rke2.yaml
cp /etc/rancher/rke2/rke2.yaml ~/.kube/config
```

```shell
# Check the /etc/rancher directory
tree /etc/rancher/
cat /etc/rancher/node/password
cat /etc/rancher/rke2/config.yaml
cat /etc/rancher/rke2/rke2-pss.yaml

# Check the binaries
tree /var/lib/rancher/rke2/bin/
```

```shell
# Expose the binaries in a standard location without touching PATH: symbolic links
ln -s /var/lib/rancher/rke2/bin/containerd /usr/local/bin/containerd
ln -s /var/lib/rancher/rke2/bin/kubectl /usr/local/bin/kubectl
ln -s /var/lib/rancher/rke2/bin/crictl /usr/local/bin/crictl
ln -s /var/lib/rancher/rke2/bin/runc /usr/local/bin/runc
ln -s /var/lib/rancher/rke2/bin/ctr /usr/local/bin/ctr
ln -s /var/lib/rancher/rke2/agent/etc/crictl.yaml /etc/crictl.yaml

runc --version
containerd --version
kubectl version
```
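A plain `ln -s` fails with "File exists" when the step is repeated. A sketch of an idempotent version using `ln -sfn`, which replaces an existing link instead; `link_tools` is a hypothetical helper and skips names that are not present in the source directory:

```shell
#!/usr/bin/env bash
# Hypothetical helper: (re)create symlinks <target-dir>/<name> -> <source-dir>/<name>.
# Usage: link_tools <source-dir> <target-dir> <name>...
link_tools() {
  local src="$1" dst="$2" name
  shift 2
  for name in "$@"; do
    if [ -e "$src/$name" ]; then
      ln -sfn "$src/$name" "$dst/$name"   # -f replaces an old link, -n does not follow it
    else
      echo "skip: $src/$name not found" >&2
    fi
  done
}

# On the server node:
# link_tools /var/lib/rancher/rke2/bin /usr/local/bin containerd kubectl crictl runc ctr
```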

- Convenience settings

```shell
source <(kubectl completion bash)
alias k=kubectl
complete -F __start_kubectl k
echo 'source <(kubectl completion bash)' >> /etc/profile
echo 'alias k=kubectl' >> /etc/profile
echo 'complete -F __start_kubectl k' >> /etc/profile
k9s
```
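Each `echo ... >> /etc/profile` adds another copy of the line if the setup is run twice. A sketch that appends a line only when the file does not already contain it verbatim; `append_once` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: append a line to a file unless an identical line already exists.
# Usage: append_once <line> <file>
append_once() {
  local line="$1" file="$2"
  # -x: whole-line match, -F: fixed string (no regex), --: line may start with "-"
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# append_once 'source <(kubectl completion bash)' /etc/profile
# append_once 'alias k=kubectl' /etc/profile
# append_once 'complete -F __start_kubectl k' /etc/profile
```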

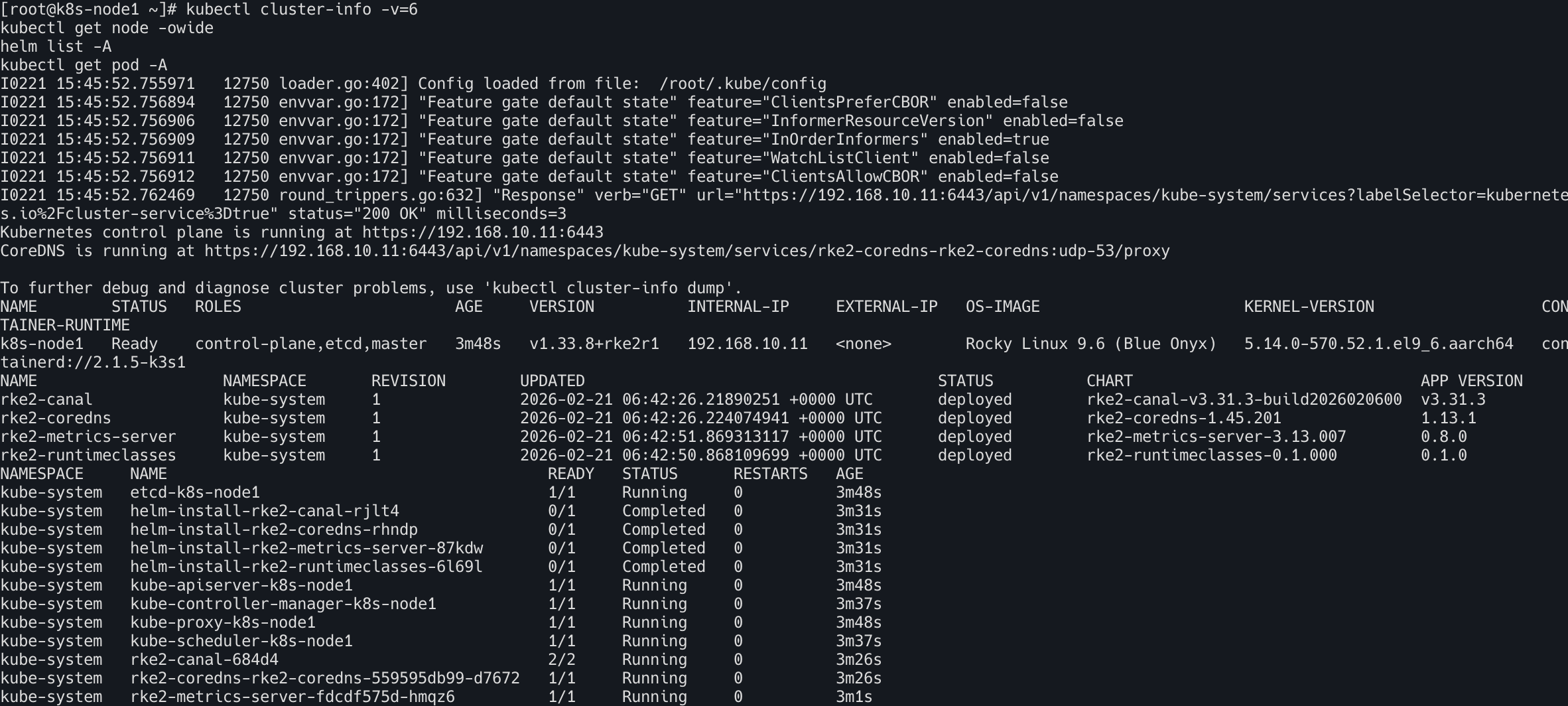

- Check node and pod information

```shell
kubectl cluster-info -v=6
kubectl get node -owide
helm list -A
kubectl get pod -A
```

Checking the RKE2 add-on Helm charts

```shell
tree /var/lib/rancher/rke2 -L 1
```

- Server directory

```shell
tree /var/lib/rancher/rke2/server/
tree /var/lib/rancher/rke2/server/ -L 1
```

- Tokens

```shell
ls -l /var/lib/rancher/rke2/server/
cat /var/lib/rancher/rke2/server/node-token
cat /var/lib/rancher/rke2/server/token
```

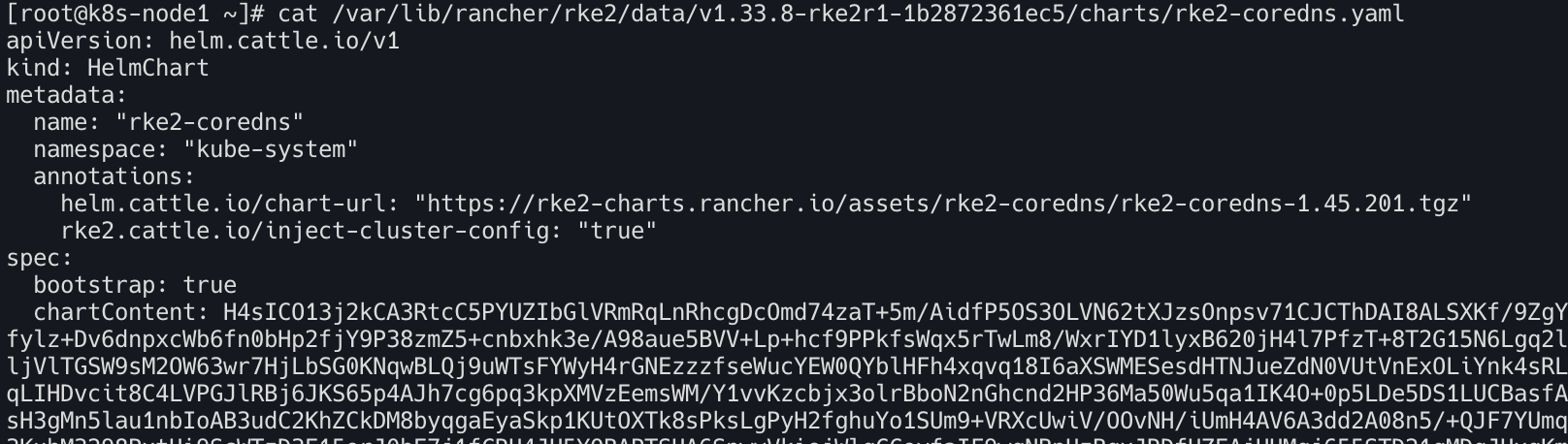

CoreDNS Helm chart manifest

```shell
cat /var/lib/rancher/rke2/server/manifests/rke2-coredns.yaml
```

- Verify

```shell
kubectl get crd | grep -E 'helm|addon'
kubectl get helmcharts.helm.cattle.io -n kube-system -owide
kubectl get job -n kube-system
kubectl get helmchartconfigs -n kube-system
kubectl describe helmchartconfigs -n kube-system rke2-canal
kubectl get addons.k3s.cattle.io -n kube-system
```

Checking the RKE2 certificates

- Kubernetes CA certificate

```shell
cat /var/lib/rancher/rke2/server/tls/server-ca.crt | openssl x509 -text -noout
```

- kube-apiserver serving (web server) certificate

```shell
cat /var/lib/rancher/rke2/server/tls/serving-kube-apiserver.crt | openssl x509 -text -noout
```

Checking the Helm chart manifests

data directory

```shell
tree /var/lib/rancher/rke2/data/
tree /var/lib/rancher/rke2/data/ -L 2
└── v1.33.8-rke2r1-1b2872361ec5
    ├── bin      # binaries for the core components
    └── charts   # Helm charts deployed by the Helm controller
```

```shell
cat /var/lib/rancher/rke2/data/v1.33.8-rke2r1-1b2872361ec5/charts/rke2-coredns.yaml
```

Checking the agent directory

```shell
# agent directory
tree /var/lib/rancher/rke2/agent/ | more
tree /var/lib/rancher/rke2/agent/ -L 3
├── client-ca.crt                   # client kubeconfig and certificate files
...
├── containerd                      # containerd root directory (config value: root = "/var/lib/rancher/rke2/agent/containerd")
│   ├── bin
│   ├── containerd.log
│   ├── io.containerd.content.v1.content
│   │   ├── blobs
│   │   └── ingest
...
│   └── tmpmounts
├── etc                             # main configuration files
│   ├── containerd
│   │   └── config.toml
│   ├── crictl.yaml
│   └── kubelet.conf.d
│       └── 00-rke2-defaults.conf
├── images
│   ├── cloud-controller-manager-image.txt
│   ├── etcd-image.txt
│   ├── kube-apiserver-image.txt
│   ├── kube-controller-manager-image.txt
│   ├── kube-proxy-image.txt
│   ├── kube-scheduler-image.txt
│   └── runtime-image.txt
├── kubelet.kubeconfig              # client kubeconfig and certificate files
...
├── logs                            # kubelet log
│   └── kubelet.log
├── pod-manifests                   # static pod manifests
│   ├── cloud-controller-manager.yaml
│   ├── etcd.yaml
│   ├── kube-apiserver.yaml
│   ├── kube-controller-manager.yaml
│   ├── kube-proxy.yaml
│   └── kube-scheduler.yaml
...
```

Checking the crictl configuration

```shell
crictl ps

cat /var/lib/rancher/rke2/agent/etc/crictl.yaml
runtime-endpoint: unix:///run/k3s/containerd/containerd.sock

ln -s /var/lib/rancher/rke2/agent/etc/crictl.yaml /etc/crictl.yaml   # skip if already linked in the earlier step
crictl ps
crictl images

## Do not edit config.toml directly in RKE2: manual changes are overwritten when rke2/k3s restarts
cat /var/lib/rancher/rke2/agent/etc/containerd/config.toml
# File generated by rke2. DO NOT EDIT. Use config.toml.tmpl instead.
version = 3
root = "/var/lib/rancher/rke2/agent/containerd"
...
[plugins.'io.containerd.cri.v1.images'.registry]
  config_path = "/var/lib/rancher/rke2/agent/etc/containerd/certs.d"

## The containerd registry config directory does not exist yet
ls -l /var/lib/rancher/rke2/agent/etc/containerd/certs.d
ls: cannot access '/var/lib/rancher/rke2/agent/etc/containerd/certs.d': No such file or directory

## Container image names
grep -H '' /var/lib/rancher/rke2/agent/images/*
/var/lib/rancher/rke2/agent/images/etcd-image.txt:index.docker.io/rancher/hardened-etcd:v3.6.7-k3s1-build20260126
/var/lib/rancher/rke2/agent/images/kube-apiserver-image.txt:index.docker.io/rancher/hardened-kubernetes:v1.34.3-rke2r3-build20260127
/var/lib/rancher/rke2/agent/images/kube-controller-manager-image.txt:index.docker.io/rancher/hardened-kubernetes:v1.34.3-rke2r3-build20260127
/var/lib/rancher/rke2/agent/images/kube-proxy-image.txt:index.docker.io/rancher/hardened-kubernetes:v1.34.3-rke2r3-build20260127
/var/lib/rancher/rke2/agent/images/kube-scheduler-image.txt:index.docker.io/rancher/hardened-kubernetes:v1.34.3-rke2r3-build20260127
/var/lib/rancher/rke2/agent/images/runtime-image.txt:index.docker.io/rancher/rke2-runtime:v1.34.3-rke2r3
```

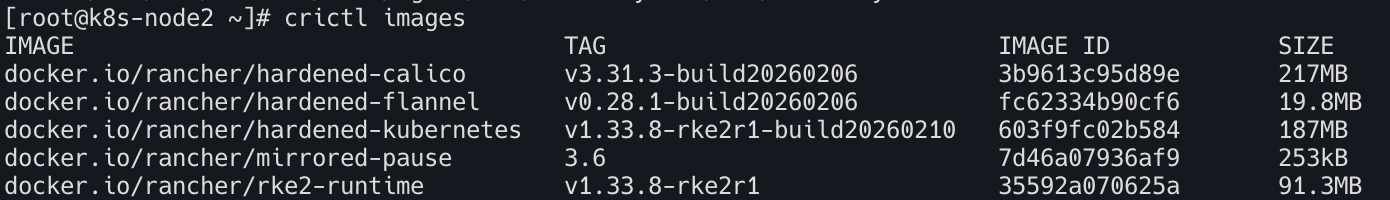

crictl images

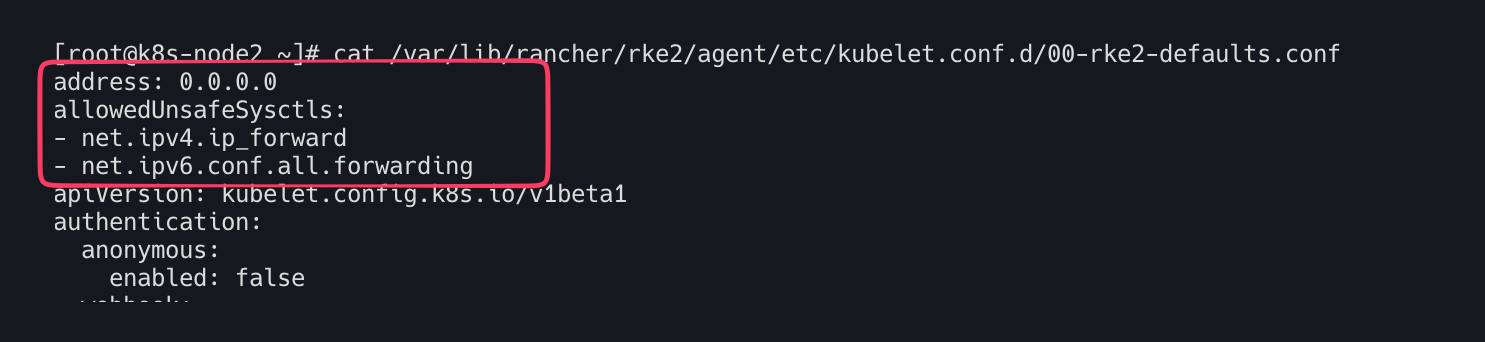

Checking the kubelet config file

- Security-recommended and enterprise-recommended settings are applied

```shell
cat /var/lib/rancher/rke2/agent/etc/kubelet.conf.d/00-rke2-defaults.conf
address: 192.168.10.11
apiVersion: kubelet.config.k8s.io/v1beta1
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /var/lib/rancher/rke2/agent/client-ca.crt
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
cgroupDriver: systemd
clusterDNS:
- 10.43.0.10
clusterDomain: cluster.local
containerRuntimeEndpoint: unix:///run/k3s/containerd/containerd.sock
cpuManagerReconcilePeriod: 10s
crashLoopBackOff: {}
evictionHard:
  imagefs.available: 5%
  nodefs.available: 5%
evictionMinimumReclaim:
  imagefs.available: 10%
  nodefs.available: 10%
evictionPressureTransitionPeriod: 5m0s
failSwapOn: false   # upstream kubelet refuses to start with swap enabled by default; RKE2's defaults allow it
fileCheckFrequency: 20s
healthzBindAddress: 127.0.0.1
httpCheckFrequency: 20s
imageMaximumGCAge: 0s
imageMinimumGCAge: 2m0s
kind: KubeletConfiguration
logging:
  flushFrequency: 5s
  format: text
  options:
    json:
      infoBufferSize: "0"
    text:
      infoBufferSize: "0"
  verbosity: 0
memorySwap: {}
nodeStatusReportFrequency: 5m0s
nodeStatusUpdateFrequency: 10s   # the kubelet computes node status every 10s and reports it to the control plane when it changes
resolvConf: /etc/resolv.conf
runtimeRequestTimeout: 2m0s
serializeImagePulls: false   # allow parallel image pulls
shutdownGracePeriod: 0s
shutdownGracePeriodCriticalPods: 0s
staticPodPath: /var/lib/rancher/rke2/agent/pod-manifests
streamingConnectionIdleTimeout: 4h0m0s
syncFrequency: 1m0s
tlsCertFile: /var/lib/rancher/rke2/agent/serving-kubelet.crt
tlsPrivateKeyFile: /var/lib/rancher/rke2/agent/serving-kubelet.key
volumeStatsAggPeriod: 1m0s
```
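To check a single flat setting such as failSwapOn without reading the whole file, a small awk lookup is enough. This is a sketch only: `conf_get` is hypothetical and handles plain `key: value` lines at column 0; nested maps and lists (authentication, evictionHard, ...) need a real YAML tool such as yq.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of a top-level "key: value" line in a flat YAML file.
# Usage: conf_get <key> <file>
conf_get() {
  local key="$1" file="$2"
  awk -v k="$key" '
    index($0, k ": ") == 1 {
      v = substr($0, length(k) + 3)   # text after "key: "
      sub(/[ \t]+#.*$/, "", v)        # drop a trailing comment
      print v
      exit
    }' "$file"
}

# conf_get failSwapOn /var/lib/rancher/rke2/agent/etc/kubelet.conf.d/00-rke2-defaults.conf
```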

- kubelet log

```shell
tail -f /var/lib/rancher/rke2/agent/logs/kubelet.log
```

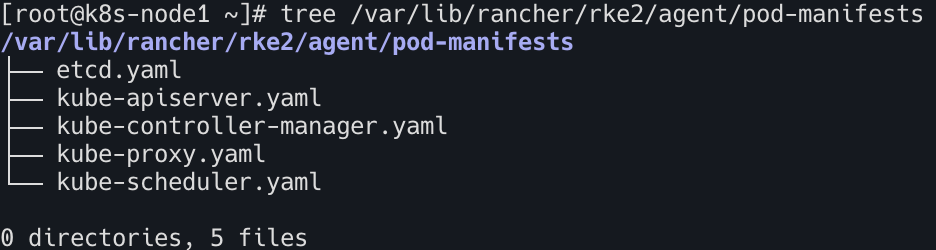

Exploring the static pods

Security-recommended and enterprise-recommended settings are applied here as well.

```shell
tree /var/lib/rancher/rke2/agent/pod-manifests
```

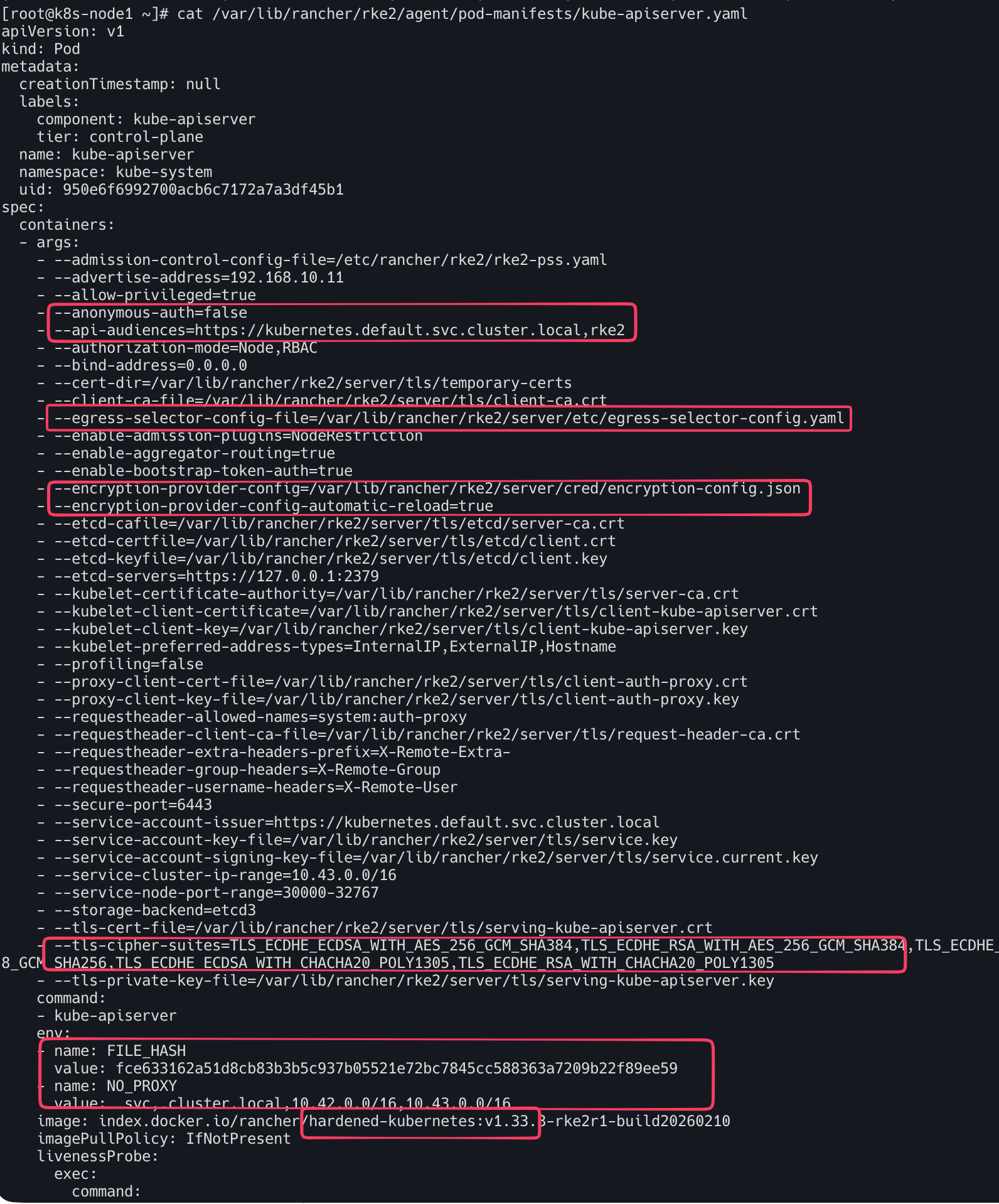

kube-apiserver

```shell
cat /var/lib/rancher/rke2/agent/pod-manifests/kube-apiserver.yaml
```

ETCD

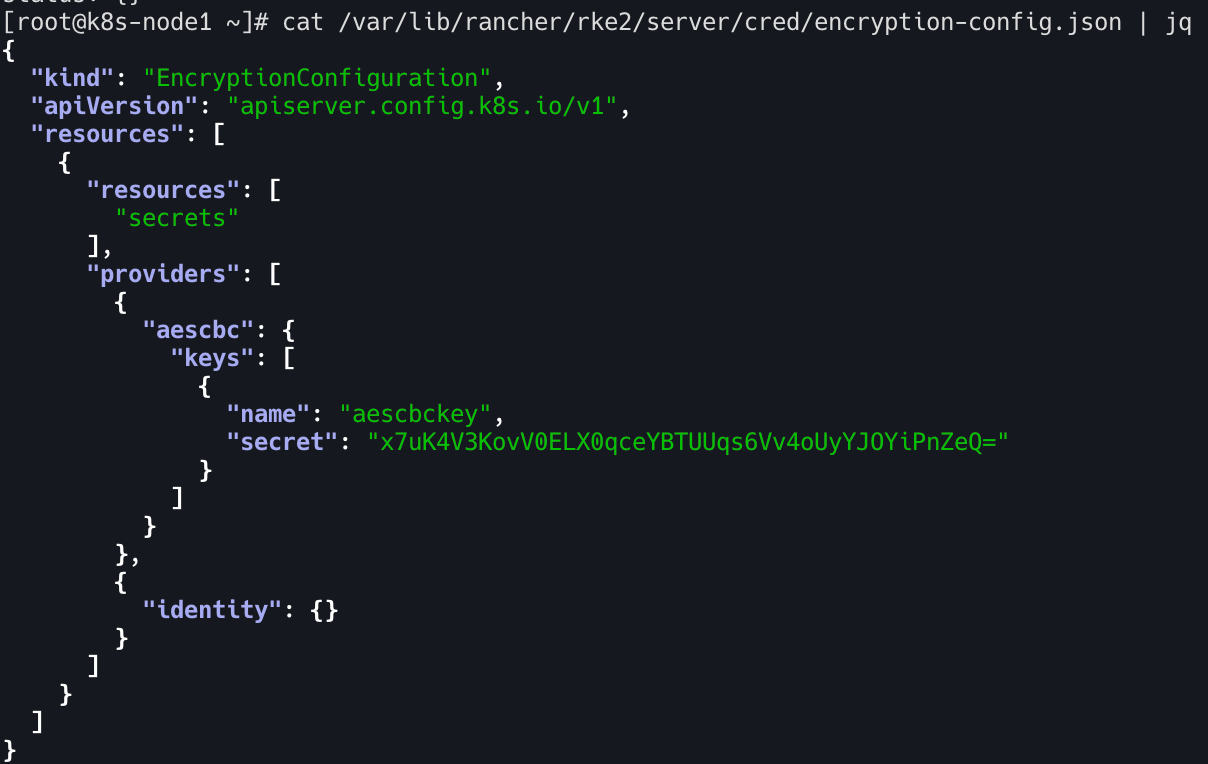

etcd data encryption

```shell
cat /var/lib/rancher/rke2/server/cred/encryption-config.json | jq
```

Encryption provider: AES-CBC

- etcd: host-path volume mounts use the Directory type as little as possible and mount individual files (File type) instead

```shell
kubectl describe pod -n kube-system etcd-k8s-node1
cat /var/lib/rancher/rke2/agent/pod-manifests/etcd.yaml
```

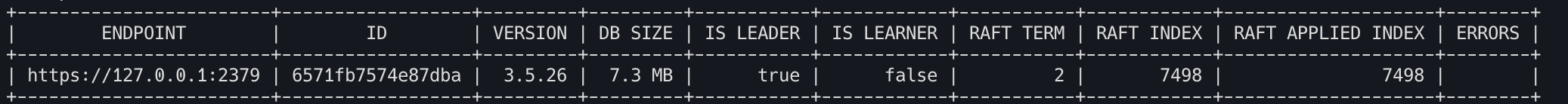

etcdctl

```shell
find / -name etcdctl 2>/dev/null
# The snapshot number (1 here) depends on the environment: use the path printed by find
ln -s /var/lib/rancher/rke2/agent/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/1/fs/usr/local/bin/etcdctl /usr/local/bin/etcdctl
```
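The overlayfs snapshot number in that path is assigned by containerd and differs between nodes and image pulls. A sketch that searches the snapshot directory instead of hard-coding the number; `find_etcdctl` is hypothetical, and if several etcd image versions are present it simply returns the first match:

```shell
#!/usr/bin/env bash
# Hypothetical helper: locate etcdctl inside containerd's overlayfs snapshots.
# Usage: find_etcdctl [snapshots-dir]
find_etcdctl() {
  local snaps="${1:-/var/lib/rancher/rke2/agent/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots}"
  find "$snaps" -path '*/fs/usr/local/bin/etcdctl' -type f 2>/dev/null | head -n 1
}

# bin=$(find_etcdctl) && [ -n "$bin" ] && ln -sfn "$bin" /usr/local/bin/etcdctl
```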

```shell
etcdctl version
```

```shell
etcdctl \
  --endpoints=https://127.0.0.1:2379 \
  --cacert=/var/lib/rancher/rke2/server/tls/etcd/server-ca.crt \
  --cert=/var/lib/rancher/rke2/server/tls/etcd/client.crt \
  --key=/var/lib/rancher/rke2/server/tls/etcd/client.key \
  endpoint status --write-out=table
```
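etcdctl also reads its global flags from `ETCDCTL_*` environment variables (ETCDCTL_ENDPOINTS, ETCDCTL_CACERT, ETCDCTL_CERT, ETCDCTL_KEY), so the TLS options above can be exported once per shell. A sketch using the same RKE2 paths; `rke2_etcdctl_env` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: export etcdctl connection settings for the local RKE2 etcd.
# Usage: rke2_etcdctl_env [etcd-tls-dir]
rke2_etcdctl_env() {
  local tls="${1:-/var/lib/rancher/rke2/server/tls/etcd}"
  export ETCDCTL_ENDPOINTS="https://127.0.0.1:2379"
  export ETCDCTL_CACERT="$tls/server-ca.crt"
  export ETCDCTL_CERT="$tls/client.crt"
  export ETCDCTL_KEY="$tls/client.key"
}

# rke2_etcdctl_env
# etcdctl endpoint status --write-out=table
# etcdctl member list --write-out=table
```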

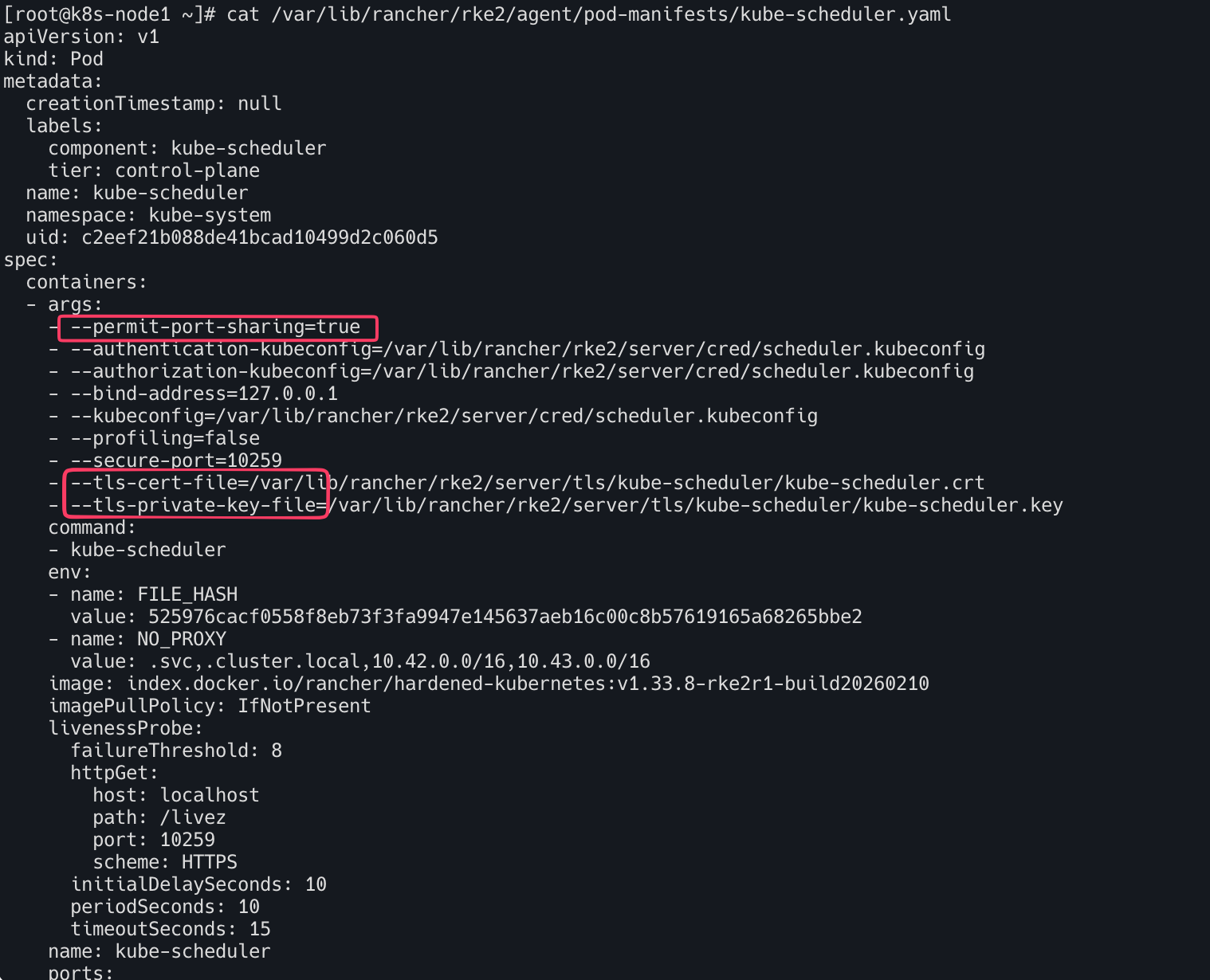

kube-scheduler

```shell
kubectl describe pod -n kube-system kube-scheduler-k8s-node1
cat /var/lib/rancher/rke2/agent/pod-manifests/kube-scheduler.yaml
```

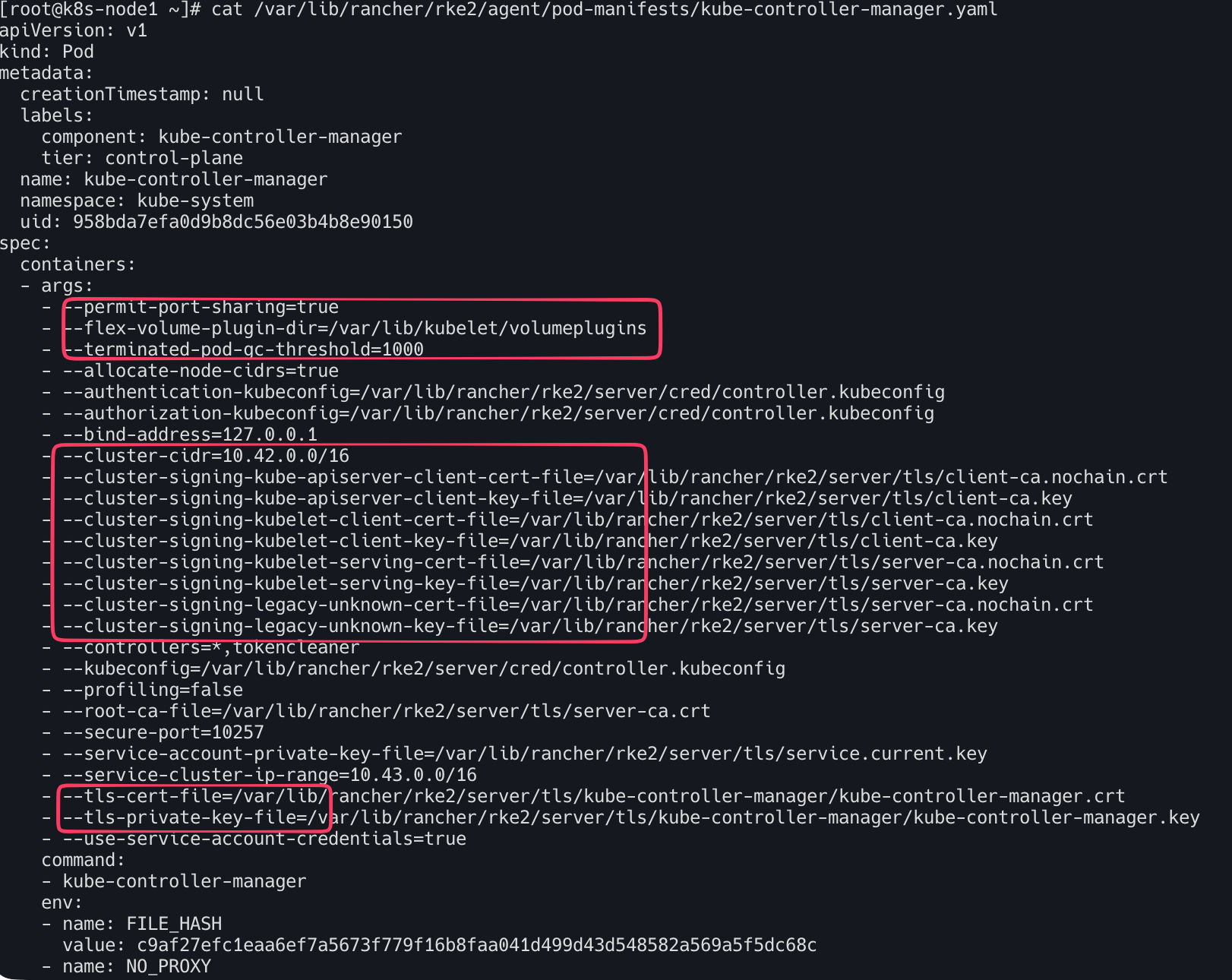

kube-controller-manager

```shell
cat /var/lib/rancher/rke2/agent/pod-manifests/kube-controller-manager.yaml
```

In RKE2, the controller manager also acts as the cluster's internal certificate issuer: it signs certificates, playing the CA role.

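- --cluster-signing-...: which CA certificate and key are used to sign when a kubelet requests a serving certificate or an API server client requests a certificate.
- --root-ca-file: root CA bundle included with service account tokens (the ca.crt that pods use to verify the API server).
- --service-account-private-key-file: private key used to sign service account tokens.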


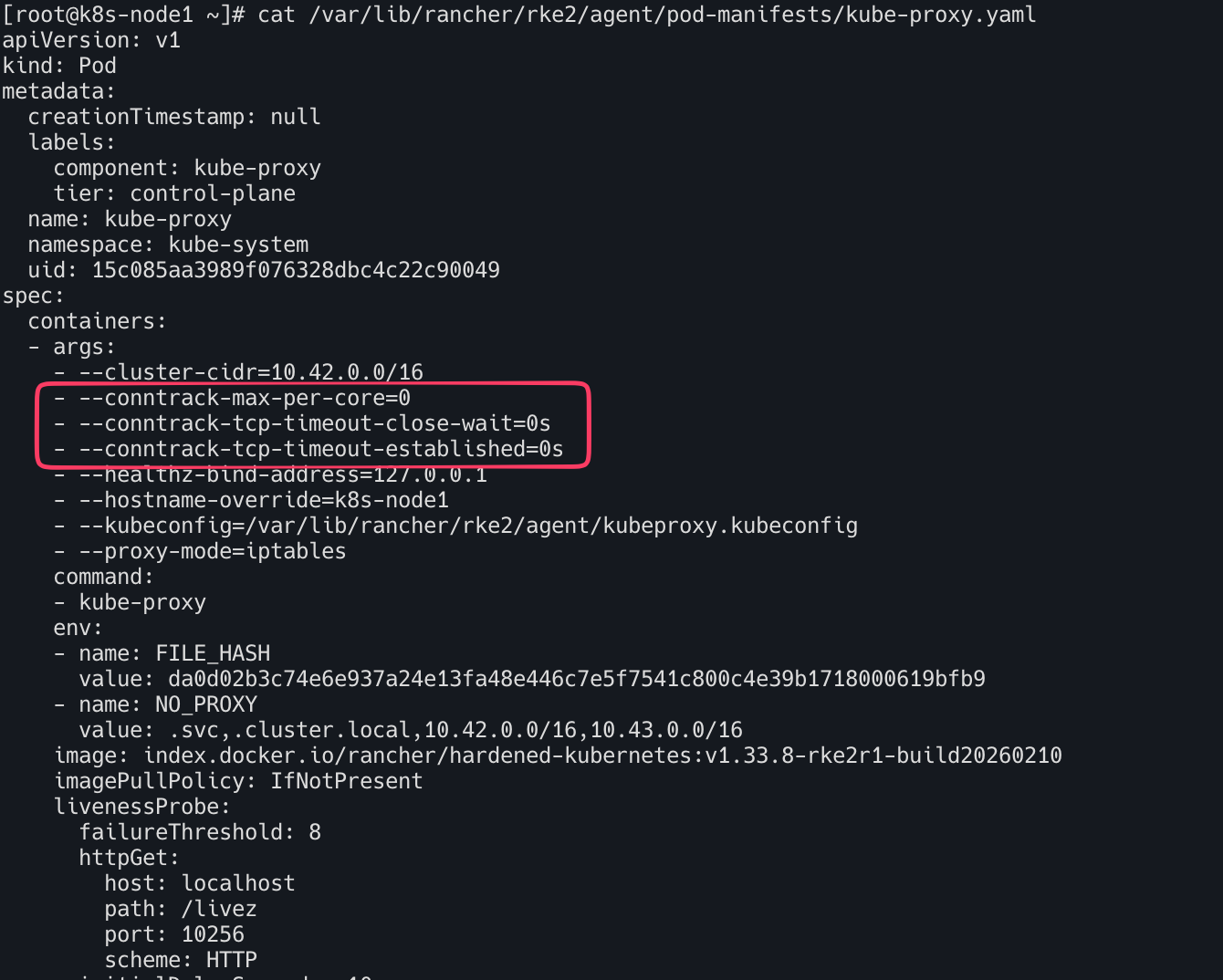

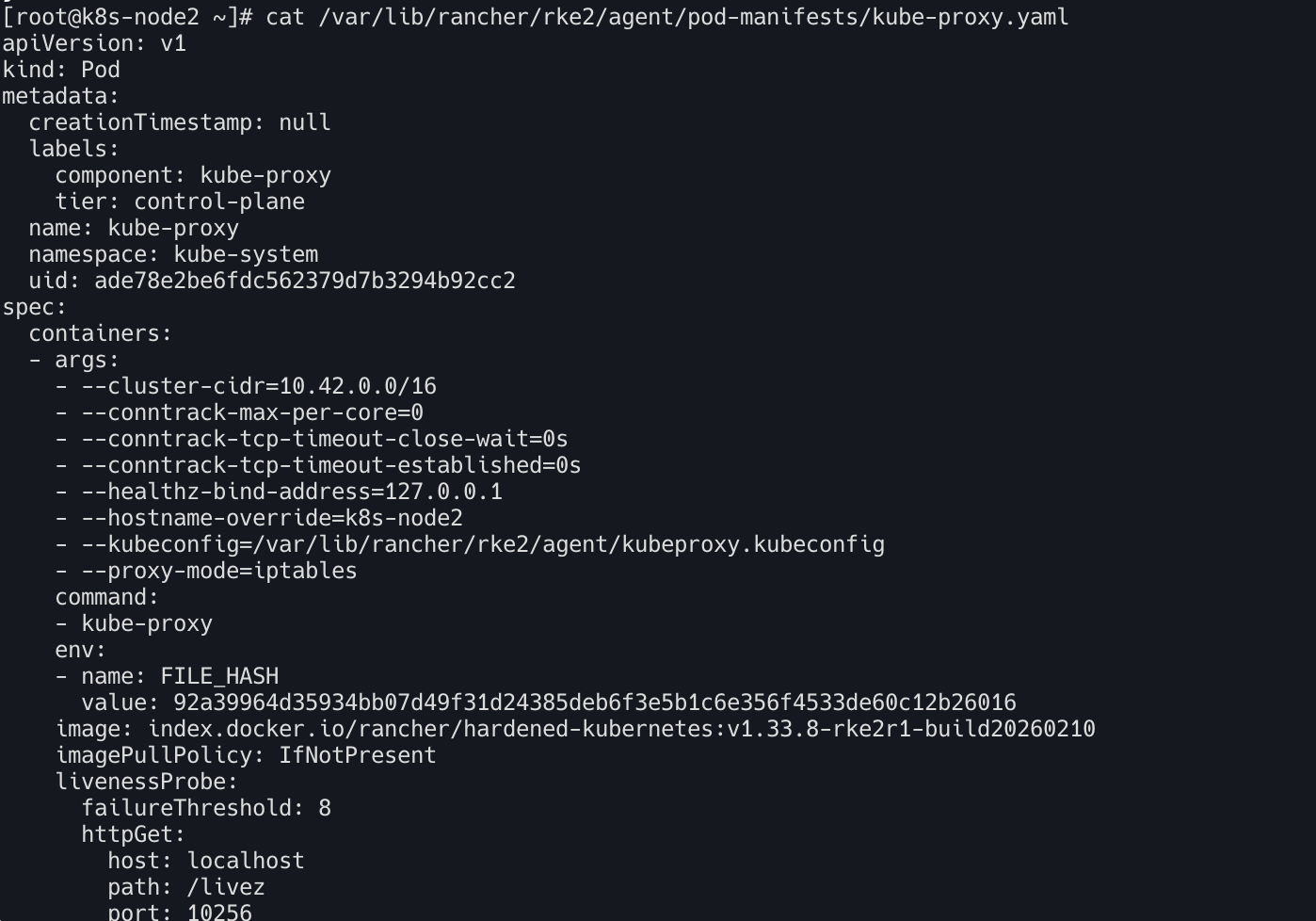

kube-proxy

```shell
cat /var/lib/rancher/rke2/agent/pod-manifests/kube-proxy.yaml
```

- conntrack settings: relevant to avoiding dropped sessions and keeping large numbers of connections in environments with heavy Service traffic

```shell
--conntrack-max-per-core=0               # 0 = leave the kernel's nf_conntrack_max as-is instead of sizing it per CPU core (not "unlimited")
--conntrack-tcp-timeout-close-wait=0s    # 0 = leave the kernel's CLOSE_WAIT timeout unchanged
--conntrack-tcp-timeout-established=0s   # 0 = leave the kernel's ESTABLISHED timeout unchanged
```
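Since a value of 0 defers to whatever the kernel already has, it is worth checking the live values. On a node, `sysctl net.netfilter.nf_conntrack_max` does this directly; the sketch below (hypothetical `show_conntrack`) reads the same /proc/sys files and accepts an alternate root directory so it can be tried without a cluster. The files exist only while the nf_conntrack module is loaded.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the kernel conntrack values that kube-proxy leaves untouched at 0.
# Usage: show_conntrack [root-dir]   (default root: /, i.e. the live /proc)
show_conntrack() {
  local root="${1:-}" key f
  for key in nf_conntrack_max nf_conntrack_tcp_timeout_established nf_conntrack_tcp_timeout_close_wait; do
    f="$root/proc/sys/net/netfilter/$key"
    if [ -r "$f" ]; then
      printf '%s = %s\n' "net.netfilter.$key" "$(cat "$f")"
    else
      printf '%s = (not available)\n' "net.netfilter.$key"
    fi
  done
}

show_conntrack
```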

Node management

Check the node token on the control plane

```shell
cat /var/lib/rancher/rke2/server/node-token
# K1087485e340b93072c09e6f7b180853499d23f217a914972d536b5df300c5305c5::server:7312ae8d5d733a574a433748d5e9591b
```
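The token follows the k3s/RKE2 "secure" format K10<CA-hash>::<user>:<password>. Per the k3s documentation the CA hash is the SHA-256 of the cluster CA certificate as stored on the server, which is how a joining node can pin the server's CA; comparing it with `sha256sum /var/lib/rancher/rke2/server/tls/server-ca.crt` is a reasonable check, but treat that path as an assumption. A sketch that splits a token into its parts; `parse_node_token` is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: split a K10<CA-hash>::<user>:<password> token into its parts.
parse_node_token() {
  local token="$1"
  case "$token" in
    K10*::*:*) ;;
    *) echo "not a K10 secure token" >&2; return 1 ;;
  esac
  local rest="${token#K10}"       # <CA-hash>::<user>:<password>
  local creds="${rest#*::}"       # <user>:<password>
  printf 'ca_hash=%s\n' "${rest%%::*}"
  printf 'user=%s\n' "${creds%%:*}"
  printf 'password=%s\n' "${creds#*:}"
}

# parse_node_token "$(cat /var/lib/rancher/rke2/server/node-token)"
```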

- Check the dedicated supervisor (registration/bootstrap) API port that nodes (servers and agents) use to join the RKE2 cluster

```shell
ss -tnlp | grep 9345
```

- Monitoring

```shell
watch -d 'kubectl get node; echo; kubectl get pod -n kube-system'
```

Adding a worker node

- Run the installer and set the token (on k8s-node2)

```shell
curl -sfL https://get.rke2.io | INSTALL_RKE2_TYPE="agent" INSTALL_RKE2_CHANNEL=v1.33 sh -

# Configure the rke2-agent service: write the rke2-agent config file
## The rke2 server process listens on port 9345 for new nodes to register.
TOKEN=<TOKEN>   # replace <TOKEN> with the node-token value from k8s-node1
```

- Write the config file for rke2-agent

```shell
mkdir -p /etc/rancher/rke2/
cat << EOF > /etc/rancher/rke2/config.yaml
server: https://192.168.10.11:9345
token: $TOKEN
EOF
```
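If the `TOKEN=<TOKEN>` line is run without substituting the real value, bash rejects it as a syntax error and `$TOKEN` stays empty, so the heredoc silently writes `token: ` with no value. A sketch that writes the same file but refuses an empty or placeholder token; `write_agent_config` is a hypothetical helper, and the restrictive file mode is a choice made here because the file holds a join secret:

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the rke2-agent config.yaml with a basic token check.
# Usage: write_agent_config <output-file> <server-url> <token>
write_agent_config() {
  local out="$1" server="$2" token="$3"
  case "$token" in
    ""|"<TOKEN>") echo "error: set the token from k8s-node1's node-token first" >&2; return 1 ;;
  esac
  mkdir -p "$(dirname "$out")"
  cat << EOF > "$out"
server: ${server}
token: ${token}
EOF
  chmod 600 "$out"   # readable by root only
}

# write_agent_config /etc/rancher/rke2/config.yaml https://192.168.10.11:9345 "$TOKEN"
```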

- Verify

```shell
cat /etc/rancher/rke2/config.yaml
```

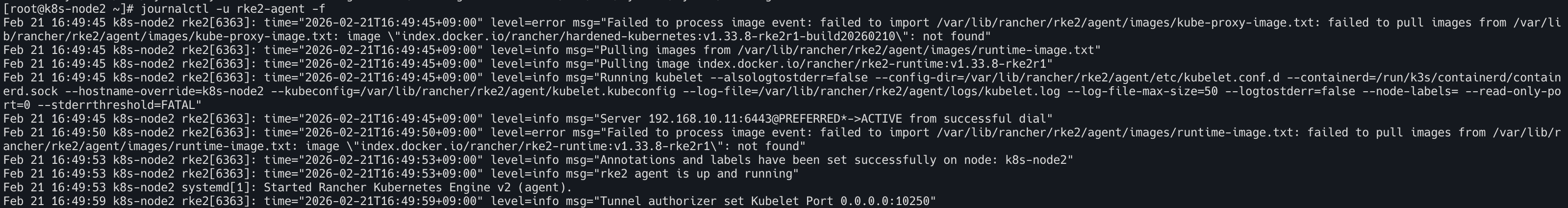

- Enable and start the service

```shell
systemctl enable --now rke2-agent.service
journalctl -u rke2-agent -f
```

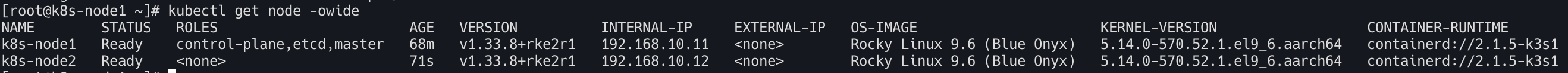

Verify from the control plane (k8s-node1)

```shell
kubectl get node -owide
kubectl get pod -n kube-system -owide | grep k8s-node2
```

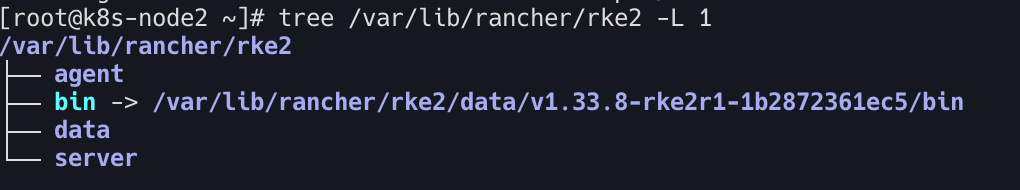

Verify on k8s-node2 (worker node)

```shell
tree /var/lib/rancher/rke2 -L 1
├── agent
├── bin -> /var/lib/rancher/rke2/data/v1.34.3-rke2r3-5b8349de68df/bin   # binaries
├── data                                                              # bin, charts
└── server                                                            # empty: this node is not a server
```

rke2-agent

```shell
systemctl status rke2-agent.service --no-pager
cat /usr/lib/systemd/system/rke2-agent.service
pstree -al
```

- Expose the binaries in a standard location without touching PATH: symbolic links

```shell
ln -s /var/lib/rancher/rke2/bin/containerd /usr/local/bin/containerd
ln -s /var/lib/rancher/rke2/bin/crictl /usr/local/bin/crictl
ln -s /var/lib/rancher/rke2/agent/etc/crictl.yaml /etc/crictl.yaml
crictl ps
crictl images
```

```shell
tree /etc/rancher/
├── node
│   └── password
└── rke2
    ├── config.yaml
    └── rke2-pss.yaml
```

- agent directory

```shell
tree /var/lib/rancher/rke2/agent/ -L 3
```

- containerd's config.toml file

```shell
cat /var/lib/rancher/rke2/agent/etc/containerd/config.toml
```

- kubelet's config file

```shell
cat /var/lib/rancher/rke2/agent/etc/kubelet.conf.d/00-rke2-defaults.conf
```

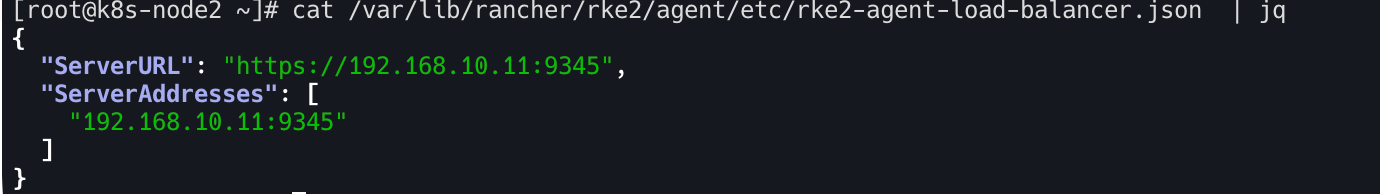

- Server address the worker node uses when registering with the control plane

```shell
cat /var/lib/rancher/rke2/agent/etc/rke2-agent-load-balancer.json | jq
```

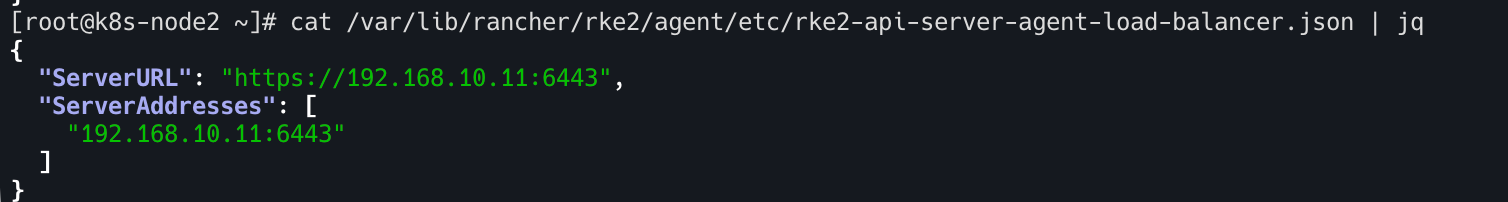

- Control-plane Kubernetes API server address

```shell
cat /var/lib/rancher/rke2/agent/etc/rke2-api-server-agent-load-balancer.json | jq
```

- Static pod: kube-proxy

```shell
cat /var/lib/rancher/rke2/agent/pod-manifests/kube-proxy.yaml
```

Removing the worker node

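```shell
# Stop the RKE2 service
systemctl stop rke2-agent

# Check the uninstall scripts
ls -l /usr/bin/rke2*

# Review the RKE2 uninstall script (it cleans up containers, network interfaces, and related files), then run it
cat /usr/bin/rke2-uninstall.sh
/usr/bin/rke2-uninstall.sh

# Confirm that the related directories were removed
tree /etc/rancher
tree /var/lib/rancher
```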

Deploying a sample application

```shell
cat << EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: webpod
spec:
  replicas: 2
  selector:
    matchLabels:
      app: webpod
  template:
    metadata:
      labels:
        app: webpod
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - webpod   # must match the pod's own label so the two replicas land on different nodes
            topologyKey: "kubernetes.io/hostname"
      containers:
      - name: webpod
        image: traefik/whoami
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: webpod
  labels:
    app: webpod
spec:
  selector:
    app: webpod
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
    nodePort: 30000
  type: NodePort
EOF
```

- Call the service repeatedly

```shell
while true; do curl -s http://192.168.10.12:30000 | grep Hostname; date; sleep 1; done
```
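To see how the NodePort Service spreads requests across the two replicas, the `Hostname:` lines that traefik/whoami returns can be counted. A sketch; `tally_hostnames` is a hypothetical helper that reads responses on stdin and prints "count hostname", busiest pod first:

```shell
#!/usr/bin/env bash
# Hypothetical helper: count responses per pod from whoami "Hostname: <pod>" lines on stdin.
tally_hostnames() {
  awk '/^Hostname:/ { n[$2]++ } END { for (h in n) print n[h], h }' | sort -k1,1nr -k2,2
}

# Send 20 requests to the NodePort, then tally:
# for i in $(seq 20); do curl -s http://192.168.10.12:30000; done | tally_hostnames
```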

This article is licensed by the copyright holder under CC BY 4.0.