Load-balancing & service discovery

    cat << EOF | kubectl apply --context kind-west -f -
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: webpod
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: webpod
      template:
        metadata:
          labels:
            app: webpod
        spec:
          affinity:
            podAntiAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
              - labelSelector:
                  matchExpressions:
                  - key: app
                    operator: In
                    values:
                    - sample-app
                topologyKey: "kubernetes.io/hostname"
          containers:
          - name: webpod
            image: traefik/whoami
            ports:
            - containerPort: 80
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: webpod
      labels:
        app: webpod
      annotations:
        service.cilium.io/global: "true"
    spec:
      selector:
        app: webpod
      ports:
      - protocol: TCP
        port: 80
        targetPort: 80
      type: ClusterIP
    EOF

    cat << EOF | kubectl apply --context kind-east -f -
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: webpod
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: webpod
      template:
        metadata:
          labels:
            app: webpod
        spec:
          affinity:
            podAntiAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
              - labelSelector:
                  matchExpressions:
                  - key: app
                    operator: In
                    values:
                    - sample-app
                topologyKey: "kubernetes.io/hostname"
          containers:
          - name: webpod
            image: traefik/whoami
            ports:
            - containerPort: 80
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: webpod
      labels:
        app: webpod
      annotations:
        service.cilium.io/global: "true"
    spec:
      selector:
        app: webpod
      ports:
      - protocol: TCP
        port: 80
        targetPort: 80
      type: ClusterIP
    EOF

| kwest get svc,ep webpod && keast get svc,ep webpod

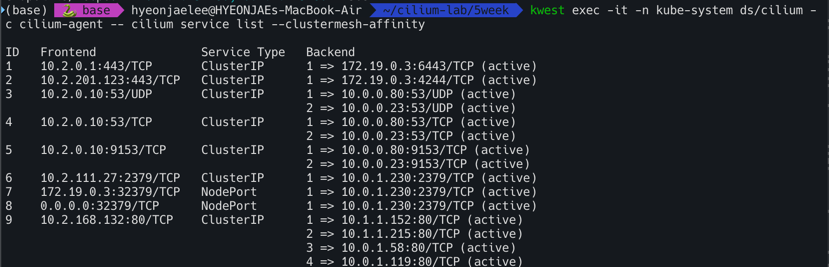

kwest exec -it -n kube-system ds/cilium -c cilium-agent -- cilium service list --clustermesh-affinity

keast exec -it -n kube-system ds/cilium -c cilium-agent -- cilium service list --clustermesh-affinity

kubectl exec -it curl-pod --context kind-west -- ping -c 1 10.1.1.190

kubectl exec -it curl-pod --context kind-east -- ping -c 1 10.0.1.224

kubectl exec -it curl-pod --context kind-west -- sh -c 'while true; do curl -s --connect-timeout 1 webpod ; sleep 1; echo "---"; done;'

kubectl exec -it curl-pod --context kind-east -- sh -c 'while true; do curl -s --connect-timeout 1 webpod ; sleep 1; echo "---"; done;'

|
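To see how the global service spreads requests across the two clusters, it can help to count which pod answered each request instead of reading the curl loop by eye. The following is a minimal sketch, assuming each traefik/whoami response contains a `Hostname:` line; `tally_hostnames` is a hypothetical helper name introduced here for illustration.

```shell
# Count how many responses came from each backend pod.
# Reads whoami responses on stdin and prints "<hostname> <count>" per pod.
tally_hostnames() {
  awk '/^Hostname:/ { count[$2]++ }
       END { for (h in count) print h, count[h] }' | sort
}

# Possible usage (assumes curl-pod exists, as in the commands above):
#   for i in $(seq 1 20); do
#     kubectl exec curl-pod --context kind-west -- curl -s --connect-timeout 1 webpod
#   done | tally_hostnames
```

If the tally lists more pod names than a single cluster runs, requests are being served from both clusters.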

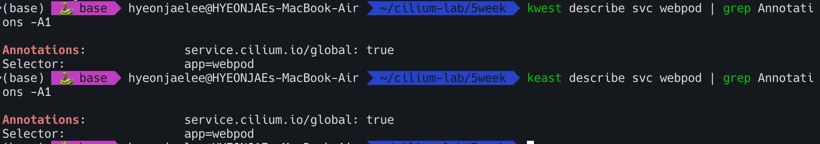

    # Current Service annotations
    kwest describe svc webpod | grep Annotations -A1
    Annotations: service.cilium.io/global: true
    Selector: app=webpod

    keast describe svc webpod | grep Annotations -A1
    Annotations: service.cilium.io/global: true
    Selector: app=webpod

Deploy the FRR container (injecting the BGP config)

    docker network ls | grep kind-west

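The container IP address of west-control-plane is 172.19.0.3, as shown below. This is the IP address of the Kind cluster's control-plane node (where the Cilium BGP control plane runs), and it is the address FRR will peer with over BGP.

    docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' west-control-plane
    # 172.19.0.3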

3.1 Create the FRR configuration file

Write the configuration that FRR will use to establish BGP peering with Cilium before starting the container.

    cat <<EOF > frr.conf
    !
    router bgp 65001
     bgp router-id 1.1.1.1
     neighbor 172.19.0.3 remote-as 64512
     neighbor 172.19.0.3 ebgp-multihop 255
     address-family ipv4 unicast
      network 10.0.0.0/16
      network 10.2.0.0/16
      redistribute connected
     exit-address-family
    !
    EOF

- 65001: FRR's AS number

- 172.19.0.3: IP of the Cilium node (the control-plane IP on the kind network)

- 64512: Cilium's AS number
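Because the control-plane IP can differ between environments, the same configuration can be generated from parameters rather than hard-coded. This is a sketch under that assumption; `render_frr_conf` is a hypothetical helper, and the advertised networks are copied unchanged from the configuration above.

```shell
# Print an FRR BGP configuration to stdout.
# usage: render_frr_conf LOCAL_ASN ROUTER_ID PEER_IP PEER_ASN
render_frr_conf() {
  cat <<EOF
!
router bgp $1
 bgp router-id $2
 neighbor $3 remote-as $4
 neighbor $3 ebgp-multihop 255
 address-family ipv4 unicast
  network 10.0.0.0/16
  network 10.2.0.0/16
  redistribute connected
 exit-address-family
!
EOF
}

# Possible usage, reproducing the values from this walkthrough:
#   render_frr_conf 65001 1.1.1.1 172.19.0.3 64512 > frr.conf
```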

3.2 Run the FRR container

    docker run -d --rm --name frr \
      --network=kind-west \
      --privileged \
      -v "$(pwd)/frr.conf":/etc/frr/frr.conf \
      frrouting/frr:latest

- --network=kind-west: attaches FRR to the same Docker network as the kind cluster

- --privileged: grants FRR the privileges it needs to manipulate the routing table

3.3 Check the FRR IP address

Check which IP address the FRR container was assigned on the Kind network.

    docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' frr
    # 172.19.0.4

Configure the Cilium BGP Peering Policy

4.1 Create a CiliumBGPPeeringPolicy

This policy makes Cilium establish BGP peering with FRR.

    # bgp-policy.yaml
    apiVersion: cilium.io/v2alpha1
    kind: CiliumBGPPeeringPolicy
    metadata:
      name: bgp-peering-policy
    spec:
      virtualRouters:
      - localASN: 64512
        neighbors:
        - peerAddress: 172.19.0.4/32 # the peer IP must be written in CIDR notation
          peerASN: 65001
        exportPodCIDR: true
        serviceSelector:
          matchLabels:
            bgp: "true"
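The peerAddress field takes the FRR address in CIDR notation, while `docker inspect` returns a bare IP, so the value needs a /32 suffix before it goes into the policy. A small sketch of that normalization follows; `to_cidr` is a hypothetical helper and only handles IPv4.

```shell
# Append /32 to a bare IPv4 address; leave values that already carry a prefix length untouched.
# usage: to_cidr ADDRESS
to_cidr() {
  case "$1" in
    */*) printf '%s\n' "$1" ;;
    *)   printf '%s/32\n' "$1" ;;
  esac
}

# Possible usage with the FRR address found in step 3.3:
#   to_cidr 172.19.0.4
```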

4.2 Create a CiliumLoadBalancerIPPool

    docker network inspect kind-west
    [
      {
        "Name": "kind",
        ...
        "IPAM": {
          "Driver": "default",
          "Config": [
            {
              "Subnet": "172.18.0.0/16",   <-- this is the subnet that FRR and Kind share
              "Gateway": "172.18.0.1"
            }
          ]
        },
        ...
      }
    ]

    # lb-pool.yaml
    apiVersion: cilium.io/v2alpha1
    kind: CiliumLoadBalancerIPPool
    metadata:
      name: lb-pool
    spec:
      blocks:
      - cidr: "172.18.0.240/28" # chosen from within the subnet that FRR and the Kind nodes use
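Since the pool is meant to sit inside the Docker network's subnet, the containment can be checked with a little integer arithmetic before applying the manifest. This is a rough sketch, assuming IPv4 addresses without leading zeros and a shell with 64-bit arithmetic; `ip_to_int` and `cidr_contains` are hypothetical helper names.

```shell
# Convert a dotted-quad IPv4 address to a single integer.
# Runs in a subshell so the IFS change does not leak into the caller.
ip_to_int() (
  IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Succeed if INNER_CIDR lies entirely within OUTER_CIDR.
# usage: cidr_contains OUTER_CIDR INNER_CIDR
cidr_contains() (
  outer_ip=${1%/*}; outer_len=${1#*/}
  inner_ip=${2%/*}; inner_len=${2#*/}
  # The inner block must be at least as specific as the outer one.
  [ "$inner_len" -ge "$outer_len" ] || exit 1
  # Build the outer netmask and compare the network bits of both addresses.
  mask=$(( (4294967295 << (32 - outer_len)) & 4294967295 ))
  [ $(( $(ip_to_int "$outer_ip") & mask )) -eq $(( $(ip_to_int "$inner_ip") & mask )) ]
)

# Possible usage with the values shown above:
#   cidr_contains 172.18.0.0/16 172.18.0.240/28 && echo "pool is inside the subnet"
```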

Deploy a test application and Service

    # app-service.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: sample-app
      labels:
        bgp: "true" # label matched by the BGP policy's serviceSelector
    spec:
      type: LoadBalancer # lets Cilium allocate an IP from the pool
      ports:
      - port: 80
        targetPort: 80 # must match the port the container listens on
      selector:
        app: sample-app
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: sample-app
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: sample-app
      template:
        metadata:
          labels:
            app: sample-app
        spec:
          containers:
          - name: sample-app
            image: nginxdemos/hello:plain-text
            ports:
            - containerPort: 80
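After the manifest is applied, the LoadBalancer IP may take a moment to appear, so a simple polling loop can be useful before testing. The sketch below is one generic way to do that; `retry` is a hypothetical helper, and the kubectl invocation in the comment assumes the Service was applied to the current context.

```shell
# Run a command until it succeeds or the attempt budget is used up.
# usage: retry ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
retry() {
  attempts=$1; delay=$2; shift 2
  i=1
  while :; do
    "$@" && return 0
    # Give up once the last allowed attempt has failed.
    [ "$i" -ge "$attempts" ] && return 1
    i=$((i + 1))
    sleep "$delay"
  done
}

# Possible usage: wait up to about a minute for an external IP on sample-app.
#   retry 30 2 sh -c '[ -n "$(kubectl get svc sample-app \
#     -o jsonpath="{.status.loadBalancer.ingress[0].ip}")" ]'
```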